Stanford University has released the latest AI Index Report

The seventh edition of the AI Index Report has been published. Unlike previous editions, the 2024 AI Index Report extends its coverage of relevant trends and adds a new chapter on science and medicine.

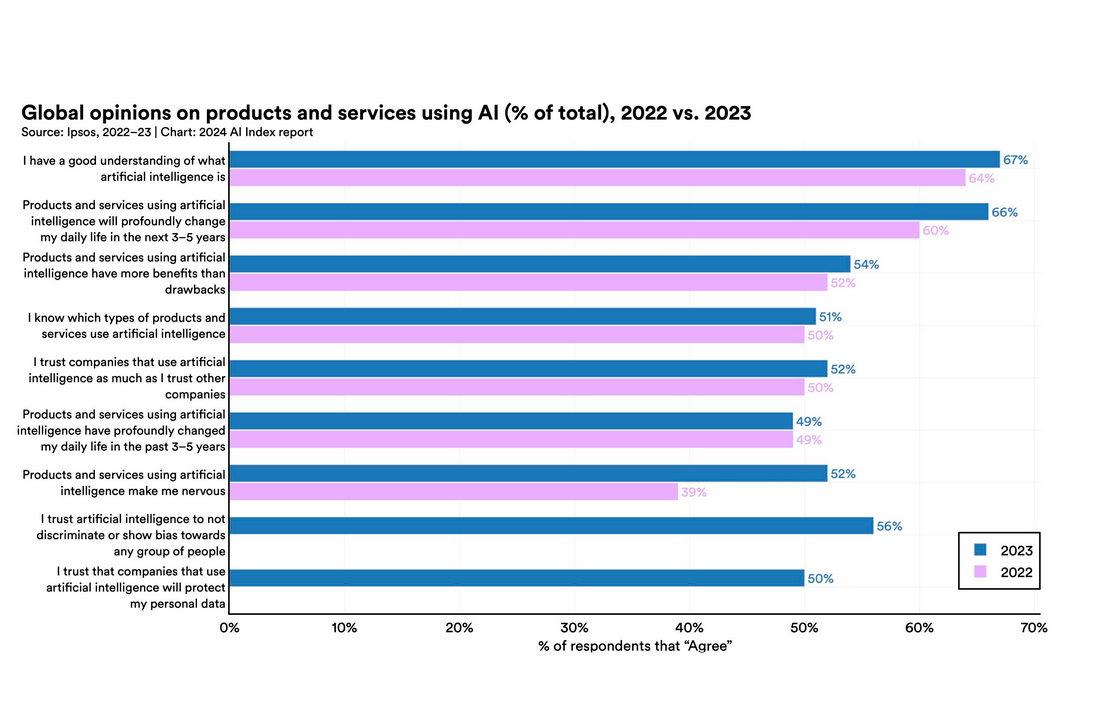

The seventh edition of the AI Index Report went live recently, bringing an in-depth analysis of last year's most relevant AI trends, "at an important moment when AI’s influence on society has never been more pronounced." Unlike previous versions of the report, this year's edition features extended coverage of relevant trends, including technical advancements in AI, public perceptions of the technology, and the geopolitical dynamics surrounding its development. It also includes a new chapter on the impact of AI in science and medicine.

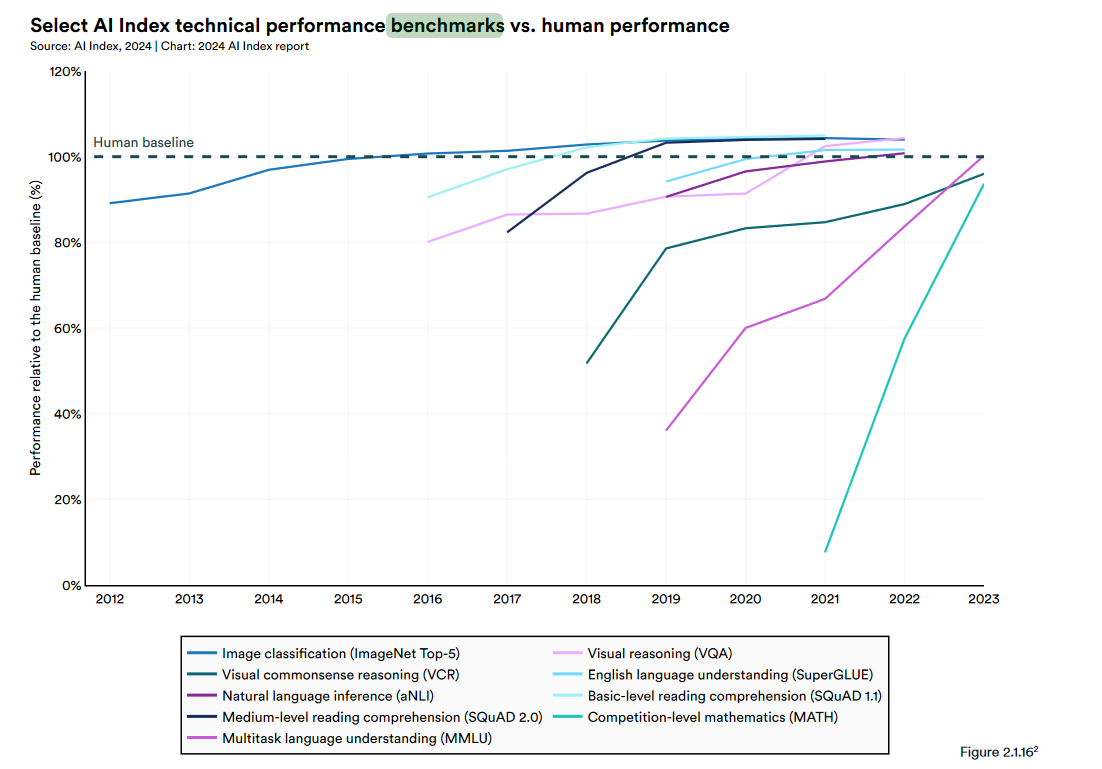

The report's key takeaways include the (repeated) confirmation of some facts and trends that have become increasingly prominent in public discussions of AI and its societal impact. For instance, in a rather ironic turn of events, the report finds that although AI has surpassed the human baseline on some image classification, visual reasoning, and English understanding benchmarks, it still has some way to go before it achieves comparable performance on other tasks, including competition-level mathematics, visual commonsense reasoning, and planning. However, this seems to have a limited impact on the public perception of AI, with the data showing that some surveyed populations are becoming increasingly concerned about AI rather than excited by it. Mixed feelings about AI are also reflected in the workplace: the report finds that while AI may boost productivity and even help close the gap between low- and high-skilled workers, some studies suggest that, without appropriate oversight, it can negatively impact performance.

There also seems to be a vicious circle in the making as frontier models become increasingly expensive to train. According to the report's estimates, OpenAI spent about $78 million on compute to train GPT-4, while training Google's Gemini Ultra cost around $191 million in compute. It comes as no surprise, then, that well-funded, profit-driven private research firms are producing over three times as many frontier models as academic research teams, even as academia-industry collaborations become more frequent. In turn, because they can leverage and profit from the groundbreaking fruits of their labor, private research firms are raking in considerable amounts of funding, although smaller generative AI startups are also profiting from this trend. Collaborations between academia and industry may not bring home the big bucks, but they are undoubtedly drivers of scientific progress, given that AI has generally been accelerating scientific advances.

Many models originate in US-based institutions, which lines up with the increase in AI regulations in the United States: the number of AI-related regulations there rose sharply from one in 2016 to 25 in 2023. Moreover, the US may be leading a global trend in which governments, lawmakers, and other policymakers are scrambling to keep up with a dynamic, ever-evolving ecosystem. Still, the increase in high-level policies and regulations may also have forced everyone to acknowledge a similarly concerning fact: lower-level evaluation frameworks are just as lacking as global policies were a couple of years ago. The report found that, since there is no standard for responsible AI reporting, private vendors tend to evaluate their models against different responsible AI benchmarks, which complicates and obscures any attempt at external safety evaluation or comparison. Fortunately, standardized evaluation frameworks are slowly taking hold, which may soon yield a different picture of this issue.

The 2024 AI Index Report is an invaluable resource and a neutral, thoroughly researched, broadly sourced guide to the increasingly complex and fast-moving AI ecosystem. The full report is available here.